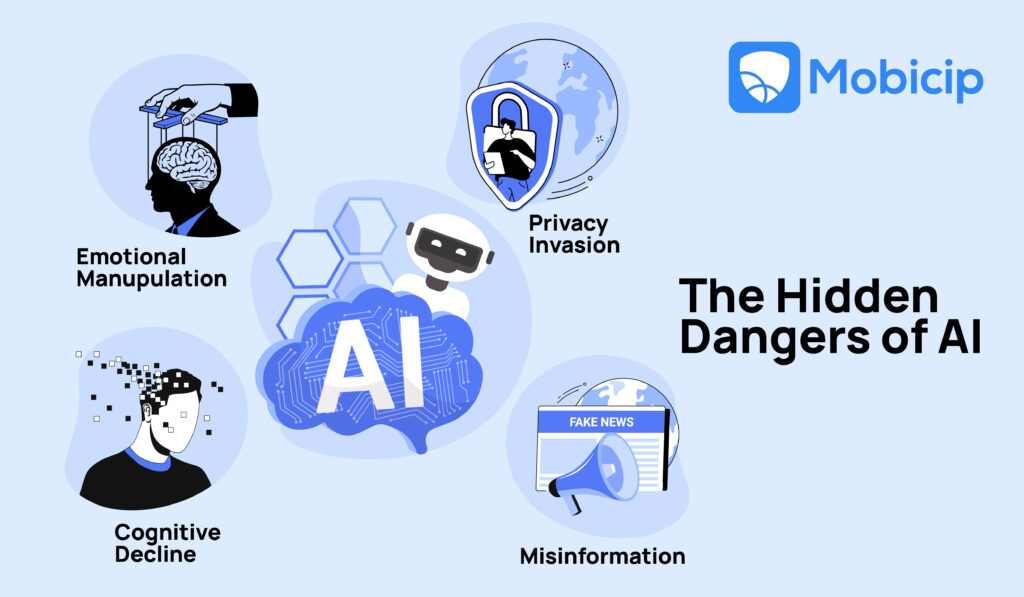

The Hidden Dangers of AI

As AI becomes more embedded in education, entertainment, and social interactions, it brings a range of risks that many parents may not immediately recognize. These include exposure to harmful content, emotional manipulation, privacy breaches, deepfake exploitation, misinformation, and the erosion of critical thinking and social skills. Read on to learn more.

Introduction

Last week, I was in an appliance store and found myself bewildered by the rows of refrigerators and washing machines solemnly declaring their inner workings to be a product of AI. I tried escaping to the air conditioner aisle, only to find that the wind hadn't been spared either. At this point, AI isn't just everywhere; it's everything, everywhere, all at once, with none of the Oscar wins. At least, not yet.

Children aren't exempt from this spread of AI algorithms. As the technology steadily weaves its way into their academic and personal lives, parents need to understand the risks it poses and how to address them. While these technologies offer convenience and new avenues for learning, they also open the door to a host of risks that are easy to miss.

This article explores six key AI risks to children:

- Exposure to Inappropriate or Harmful Content via AI Algorithms

- Emotional Manipulation and AI Companionship

- Data Privacy and Surveillance Risks

- AI-Driven Grooming and Deepfake Exploitation

- Misinformation and Biased AI Responses

- Undermining Social and Cognitive Development

It then examines how parents can harness the benefits of AI while keeping their children safe, and how parental control platforms like Mobicip can help families navigate the challenging domain of digital safety.

AI Risk #1: Exposure to Inappropriate or Harmful Content via AI Algorithms

Have you ever felt uncomfortably known by the websites you frequent? That's probably the recommendation engine. Recommendation engines drive much of the Internet today: from e-commerce websites to social media platforms, they tailor content to each user's preferences, and they are largely optimized for user engagement. They are built on techniques like collaborative filtering (which suggests content based on the preferences of similar users), content-based filtering (which recommends items whose attributes resemble those a user has already engaged with), and hybrid filtering (a blend of both). These algorithms have long been criticized for compromising user safety by promoting harmful content and creating online filter bubbles, or echo chambers.
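To make "collaborative filtering" less abstract, here is a minimal Python sketch of its user-based variant. It is a toy under stated assumptions, not how TikTok, YouTube, or any real platform works: the users, content categories, and engagement counts are hypothetical, and `cosine_similarity` and `recommend` are illustrative helpers rather than any library's API. It shows how a child can be steered toward content they never searched for, simply because a "similar" user engaged with it.

```python
import math

# Hypothetical engagement data: how often each (made-up) user watched each
# (made-up) content category. Illustrative numbers only, not real data.
ENGAGEMENT = {
    "alex":  {"gaming": 5, "science": 1, "pranks": 4},
    "bea":   {"gaming": 4, "pranks": 5, "extreme_challenges": 3},
    "chris": {"science": 5, "history": 4},
}


def cosine_similarity(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity between two sparse engagement vectors."""
    shared = set(a) & set(b)
    dot = sum(a[k] * b[k] for k in shared)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(user: str, data: dict[str, dict[str, float]], top_n: int = 2) -> list[str]:
    """User-based collaborative filtering: score items the user has not seen
    by other users' engagement, weighted by how similar those users are."""
    target = data[user]
    scores: dict[str, float] = {}
    for other, prefs in data.items():
        if other == user:
            continue
        sim = cosine_similarity(target, prefs)
        if sim <= 0:
            continue  # users with nothing in common contribute nothing
        for item, count in prefs.items():
            if item not in target:  # only suggest what the user hasn't engaged with yet
                scores[item] = scores.get(item, 0.0) + sim * count
    # Highest predicted engagement first; ties broken alphabetically.
    ranked = sorted(scores, key=lambda item: (-scores[item], item))
    return ranked[:top_n]
```

Running `recommend("alex", ENGAGEMENT)` returns `["extreme_challenges", "history"]`. Because Alex's gaming-and-pranks profile closely matches Bea's, her "extreme_challenges" category rises to the top even though Alex never asked for it. Real systems add content-based features and many more engagement signals, but this feedback loop is the basic mechanism behind the concerns described here.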

A recent study by University College London and the University of Kent found that TikTok's algorithms were contributing to the spread of misogynist ideology among young people, "bombarding" vulnerable users with radical content. Lawsuits like Gonzalez v. Google, which accused Google of helping radicalise individuals by recommending ISIS propaganda on YouTube, and Twitter, Inc. v. Taamneh, which accused Twitter, Google, and Facebook of failing to curb terrorist content, further illustrate the public's disenchantment with algorithmic personalisation.

Beyond spreading harmful content, excessive online curation has another pitfall: it erodes young users' ability to discover information independently. This loss of critical thinking is especially concerning given the rise of AI-generated misinformation and harmful content, such as deepfake cartoons and fake educational videos, which often bypasses filters.

How Mobicip Can Help

Mobicip equips parents with practical tools to reduce the risks posed by harmful algorithmic content. Its content filtering system blocks access to inappropriate websites, videos, and apps, helping to create a safer digital space for children. Filters can be customized based on age, maturity level, or specific categories of concern. Parents can also monitor browsing history and screen time to stay aware of their child’s digital activity. Mobicip’s YouTube monitoring feature allows visibility into trending videos, making it easier to spot red flags early. Together, these features support safer screen use and promote more thoughtful, independent media habits.

AI Risk #2: Emotional Manipulation and AI Companionship

The increasing sophistication of AI-powered chatbots and virtual companions raises concerns about the emotional bonds users, especially children, may form with them. Platforms like Replika and Character.AI, which create personas that mimic human behaviour and converse with users, exemplify this danger.

The phenomenon of humans projecting human qualities onto machines is a tale as old as time. Or at the very least, as old as 1966 and ELIZA, the psychotherapist chatbot that gave the ELIZA effect its name. The ELIZA effect describes the innate human tendency to anthropomorphise interactive programs and form emotional attachments to them, and it often affects children and teenagers more strongly. In a 2007 study, for example, Austrian researchers observed children interacting with a robotic dog named AIBO. The children treated AIBO as a sentient companion, believing it could feel sadness or happiness, and most of them showed signs of emotional attachment to the dog, accepting it as a playmate.

Attachments like these could foster emotional dependency on AI systems, which in turn could affect children's communication skills and their ability to form genuine human bonds. Overreliance on AI interactions could also lead to a sense of isolation and detachment from reality. Recently, in Orlando, a 14-year-old boy grew attached to an AI avatar on Character.AI and eventually took his own life. His mother sued the company, accusing its chatbot of worsening her son's depression and encouraging his suicide.

Parents should understand the psychological risks that AI companionship poses to children at such a vulnerable age. Explaining to children what these apps are and how they work, and setting healthy boundaries and time limits, can help keep them grounded in the real world.

How Mobicip Can Help

Mobicip allows parents to set healthy digital boundaries that can limit children's exposure to emotionally manipulative AI companions. Its app blocker and screen time management features can restrict access to AI chat platforms like Replika or Character.AI, and its activity reports keep parents informed about how much time their child spends on specific apps.

AI Risk #3: Data Privacy and Surveillance Risks

Returning to the earlier point about feeling uncomfortably known by websites: AI-powered apps, toys, and platforms often collect and store vast amounts of user data, raising concerns about children's privacy and safety. This data can include personal information (such as name or age), user preferences, and even voice recordings, images, and location, and it is often used for profiling and targeted advertising.

IBM classifies the AI risks associated with collecting user data as follows:

- Collection of sensitive data: The collection of sensitive information such as biometric or financial details.

- Collection of data without consent: User data is collected without the knowledge or permission of the user.

- Use of data without permission: User data is used for purposes that the user did not consent to. This may entail the nonconsensual sale of data to third parties, nonconsensual use of data for AI-training, etc.

- Unchecked surveillance and bias: Users are placed under indiscriminate surveillance, and AI bias can compound the problem by leading to unjust outcomes.

- Data exfiltration: The theft of user data by malicious actors.

- Data leakage: The accidental exposure of user data due to factors such as faults or gaps in the system.

Beyond these risks, the concept of informed consent in data collection turns murky when examined closely. Privacy policies and terms of use are often exploitative and difficult to understand. One study, which describes them as "hard to read, read infrequently, and unsupportive of rational decision-making," estimates the national opportunity cost of reading this fine print at $781 billion, a measure of how hard it is for users to make informed choices.

To safeguard children’s privacy, it is necessary to educate them on digital footprints and the importance of not sharing non-essential information with sites and platforms.

AI Risk #4: AI-Driven Grooming and Deepfake Exploitation

AI-generated content and deepfake technology are increasingly being used to facilitate the exploitation of children. In 2023, the Internet Watch Foundation reported over 3,000 AI-generated images constituting child sexual abuse material (CSAM). The problem has not eased since: in a 2024 Guardian article, experts warned that AI-generated explicit imagery was overwhelming law enforcement agencies. This surge in fake content makes it harder to identify and protect real victims of child exploitation. AI may also aid online grooming by helping predators create fake identities and generate tailored, convincing responses to children.

How Mobicip Can Help

Mobicip offers tools that help lower the risk of kids encountering AI-generated deepfake content or grooming attempts. Its web filter blocks inappropriate websites and harmful content, including deepfake pornographic material, before children can access them. Parents can monitor search terms, flag risky behavior, and block anonymous chat apps where grooming often starts. The software also monitors activity in real time to help minimize threats like AI-driven exploitation.

AI Risk #5: Misinformation and Biased AI Responses

Chatbots and generative AI systems can offer real academic assistance, thanks to their diverse, tailored responses and command of human language, but they are not always reliable sources for research. These tools have been found to hallucinate frequently, generating inaccurate or misleading information. OpenAI reported that its o3 model hallucinated about a third of the time on its PersonQA benchmark, while its o4-mini model did so 48% of the time. Error rates vary across models and fields; one study, for example, found that when asked specific questions about federal court cases, GPT-4 hallucinated 58% of the time while Meta's LLaMA 2 did so 88% of the time.

High hallucination rates are not the only concern. Because they are trained on data that contains social biases, generative AI systems can reproduce and reinforce those biases, producing unfair output. For example, image generation tools like DALL·E 2 and Stable Diffusion have displayed gender and racial biases: prompts like "software developer" often generate white men, while "cook" or "flight attendant" yield women or people of color. These patterns can shape children's views and reinforce stereotypes unless adults actively guide them to question AI outputs.

This unreliability makes AI tools risky for children who may use them carelessly for homework and school projects. One study found that just over half of young people aged 14 to 22 had used generative AI at some point, and over half of high school students report using it for assignments, so parents need to be aware of AI's limitations and encourage its responsible use. Teaching children to think critically and fact-check information can help them become more discerning.

How Mobicip Can Help

Mobicip can support parents in guiding their children toward responsible use of AI tools for learning. With Mobicip’s app monitoring and screen time controls, parents can ensure that children are not over-relying on generative AI for academic work or unknowingly accepting false information. The platform also lets parents set educational filters, block specific AI apps or websites, and track browsing history, enabling them to start informed conversations about what their children are reading or believing. Used thoughtfully, Mobicip can help children develop healthy digital habits, including fact-checking and cross-referencing information—key skills in an AI-influenced academic world.

AI Risk #6: Undermining Social and Cognitive Development

The Disney movie WALL-E depicts a dystopian future in which humans have regressed: stagnant and complacent, they depend entirely on the automated systems around them. The comparison may be a stretch, or a cliché, but overreliance on AI for entertainment, answers, or companionship can hinder a child's cognitive and social development. Although AI tools can assist learning and boost efficiency, their benefits depend on how, and how much, they are used. As Assistant Professor Ying Xu explains on the Harvard EdCast podcast, AI can "scaffold" learning, but this is a double-edged sword: it can deprive children of the chance to deepen their understanding through "productive struggling." She also notes that, for all its benefits, AI can't fully replicate real conversations.

Indeed, children who constantly rely on AI risk losing the ability to think creatively, critically, and independently. A 2024 study found that while AI can enhance productivity and streamline tasks, its overuse often led to weaker critical and analytical thinking skills, along with greater complacency and undue dependence. The study also notes the potential for reduced creativity and capacity for understanding.

To counter these risks, parents should look for AI tools that support learning rather than substitute for it. For example, Xu recommends AI that encourages human connection, citing her own team's Sesame Street-based AI, which prompts parents to engage with their child during reading. Parents should also help their children balance screen time with offline activities.

How Mobicip Can Help

Mobicip helps parents set screen time limits to encourage a healthier balance between AI use and real-world activities. Parents can block distracting or unproductive apps and schedule offline time for study, play, or family moments, which helps keep children from becoming overly dependent on AI tools for thinking, learning, or socializing. Mobicip's activity reports show how much time is spent on different platforms, giving parents the insight they need to guide children toward more meaningful and age-appropriate digital habits.

What Parents Can Do to Combat AI Risks

There are several key steps parents can take to guide their children in using AI responsibly. Parents can:

- Talk openly about AI risks. It is important to educate children on the common pitfalls of AI and teach them to verify information.

- Encourage independent thinking. Children need to develop essential skills and learn not to over-rely on automated systems. Keeping up with school guidelines on AI use and considering the context in which children want to use a particular kind of AI can help parents ensure it is used appropriately. A calculator is undoubtedly helpful, but a six-year-old using one while learning addition for the first time defeats the point of the lesson.

- Set digital boundaries. Teach children to use AI purely as an assistive tool for specific tasks, and encourage them to spend time offline interacting with people and engaging in recreational activities – to touch grass, as Internet slang would put it.

- Use parental control tools such as Mobicip for filtering, monitoring, and screen time.

- Stay informed about emerging AI trends. The ever-evolving nature of the digital world brings with it a need for parents to keep up with new and popular forms of technology. Understanding what they’re dealing with helps parents set appropriate and relevant boundaries.

Conclusion

AI is everywhere—slipping into homework help, bedtime stories, and even toothbrushes that claim to “learn” your child’s brushing habits (which sounds more unsettling the longer you think about it). While some of it is clever and convenient, not all of it is harmless. As parents, the goal isn’t to keep our kids away from technology, but to help them grow up knowing when to use it, when to question it, and when to just go outside and get muddy.

That means staying involved, asking questions, setting boundaries—and yes, using the right tools. Parental control platforms like Mobicip can help bring some sanity to the digital chaos, offering parents a clearer view of how their children are spending time online. With a little curiosity, a dash of scepticism, and a lot of conversation, we can raise children who aren’t just good with tech—but better without it when it counts.