Why TikTok’s “For You” Page Is a Risk to Your Child’s Mental Health

If you’re a parent, chances are your child spends more time with TikTok than with you. Ouch, but it’s true. TikTok has exploded among kids and teens, with roughly two-thirds of U.S. teens (67%) saying they use the app, according to Pew Research Center. And the crown jewel of TikTok? The “For You” page (FYP). It’s a personalized, never-ending feed powered by algorithms that can seem to know your child better than you do.

And while that sounds impressive, here’s the kicker: the same algorithm that recommends cute dog videos can also trap young users in dangerous “rabbit holes” of depression, anxiety, and harmful advice. As a line often attributed to Mark Twain goes, “A lie can travel halfway around the world while the truth is still putting on its shoes.” On TikTok, that lie is traveling at 5G speed. This article uncovers how TikTok’s “For You” page can quietly become a risk to your child’s mental health.

How the “For You” Page Works

TikTok’s recommender system is like a super-intelligent (and slightly nosy) friend. It watches every scroll, like, pause, and replay to build a personalized feed. Harmless, right? Not exactly. Once your child engages with one type of content, say “body positivity,” the algorithm can escalate to extreme dieting tips, toxic body comparisons, and, before you know it, “thinspiration.”

Amnesty International found that TikTok can flood a teenager’s feed with self-harm and eating disorder content within minutes of engagement. That’s not personalization; that’s weaponized curiosity.

The Mental Health Impact of TikTok’s Algorithm

Teenagers’ brains, which are still under construction, are especially vulnerable. Constant exposure to emotionally charged videos can shape thought patterns and reinforce anxiety and depressive tendencies.

Worse yet, a study from the British Psychological Society revealed that over 80% of mental health advice on TikTok is misleading or inaccurate. Imagine a child turning to TikTok for help with depression, only to be told to “just manifest happiness” or “try this juice cleanse.” That’s not therapy; that’s digital snake oil.

What Children Are Actually Seeing

Let’s get real: TikTok isn’t just dancing videos anymore. Kids are exposed to:

- Suicide romanticization disguised as “deep” content.

- Toxic body image pressures amplified by influencers.

- Extreme dieting tips that are closer to starvation plans than healthy dieting.

- Toxic masculinity trends teaching boys that emotions are weakness.

- Influencer-driven misinformation (because if someone with 2 million followers says it, it must be true, right?).

Even when content doesn’t technically break guidelines, it can still plant harmful seeds in impressionable minds.

Why Children Are Especially at Risk

Children and teens are still developing critical thinking skills. Their ability to spot manipulation is limited, and their emotional regulation is fragile. TikTok exploits this with a system designed to maximize engagement, even if that means maximizing harm.

Think of it like giving a toddler a casino membership. The odds are stacked, the environment is addictive, and the house always wins.

The Role of Data Collection and Surveillance

TikTok isn’t just feeding your child videos; it’s feeding on your child’s data. Every click, swipe, and pause refines the algorithm further, creating a feedback loop that pushes kids deeper into harmful niches.

Amnesty International, which conducted its research with the Algorithmic Transparency Institute, warns that this type of surveillance-based personalization not only endangers mental health but also strips away autonomy: users think they’re choosing content, but in reality the algorithm is choosing for them.

Are Safety Measures Enough?

TikTok is quick to highlight its built-in safety features such as content filters, family pairing options, screen-time reminders, and even tools to refresh the “For You” page. On paper, these sound like robust safeguards. In practice, however, they often serve more as public relations talking points than effective protections.

The truth is, these measures do little to counter the power of TikTok’s core engine: the recommendation algorithm. That algorithm is designed to maximize engagement, not well-being. Even with filters in place, kids can still be exposed to borderline content that doesn’t technically break TikTok’s rules but still plants harmful ideas, such as subtle body-shaming videos, glamorized portrayals of self-harm, or “wellness” tips that veer into dangerous territory.

Amnesty International’s findings make this painfully clear: harmful content slipped through even though all these protections were supposedly in place. That means TikTok’s guardrails aren’t just weak; they’re fundamentally mismatched to the scale and speed of the problem.

It’s like putting out a “wet floor” sign in a hurricane: technically a precaution, practically useless.

Proactive Digital Parenting with Mobicip

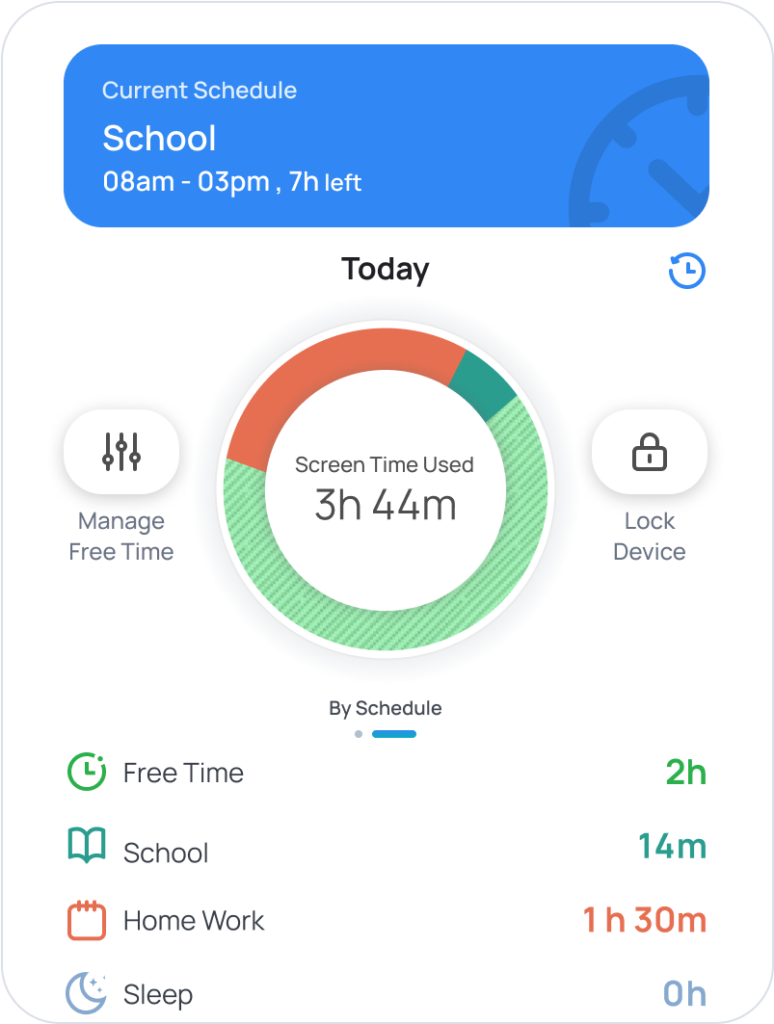

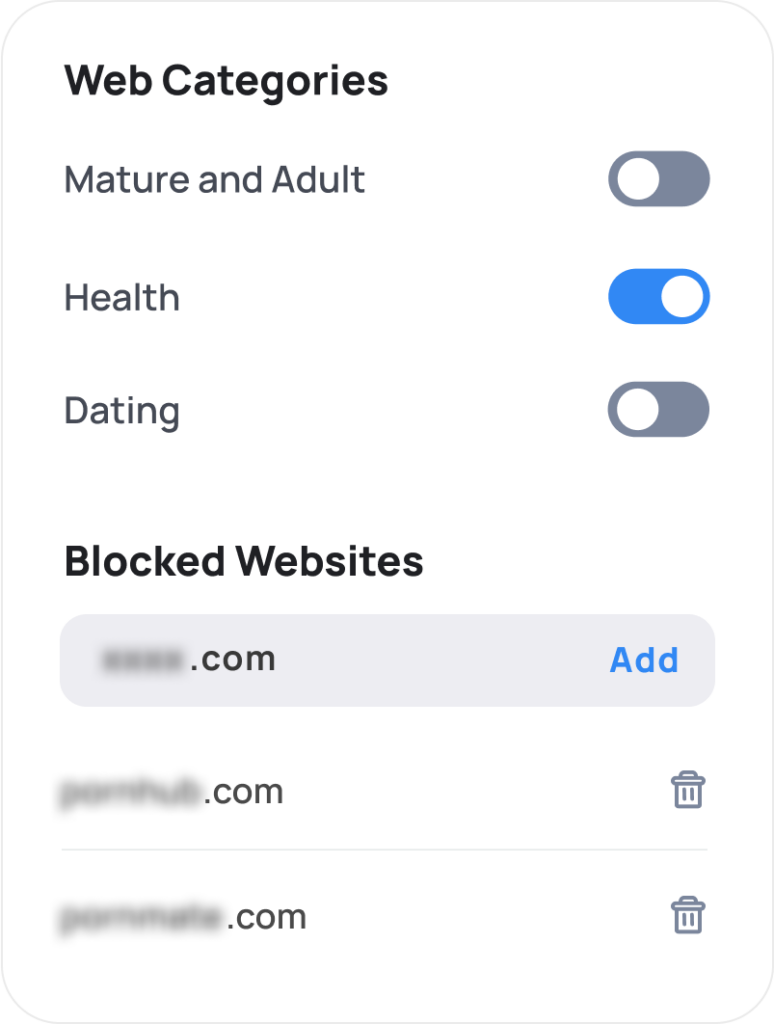

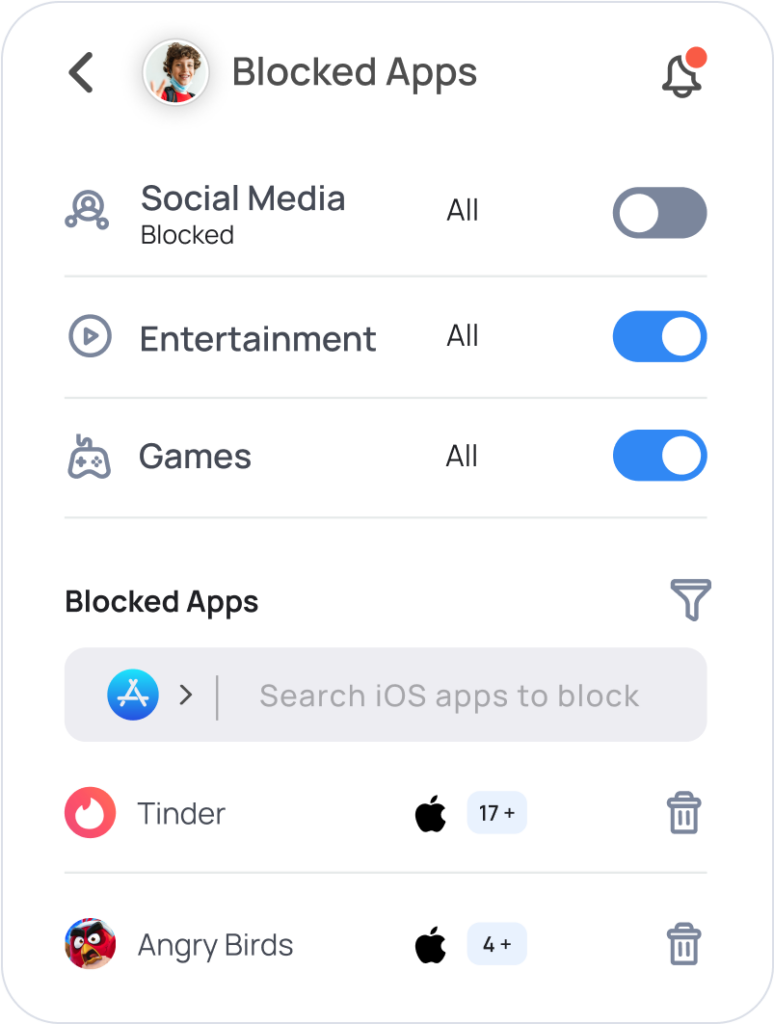

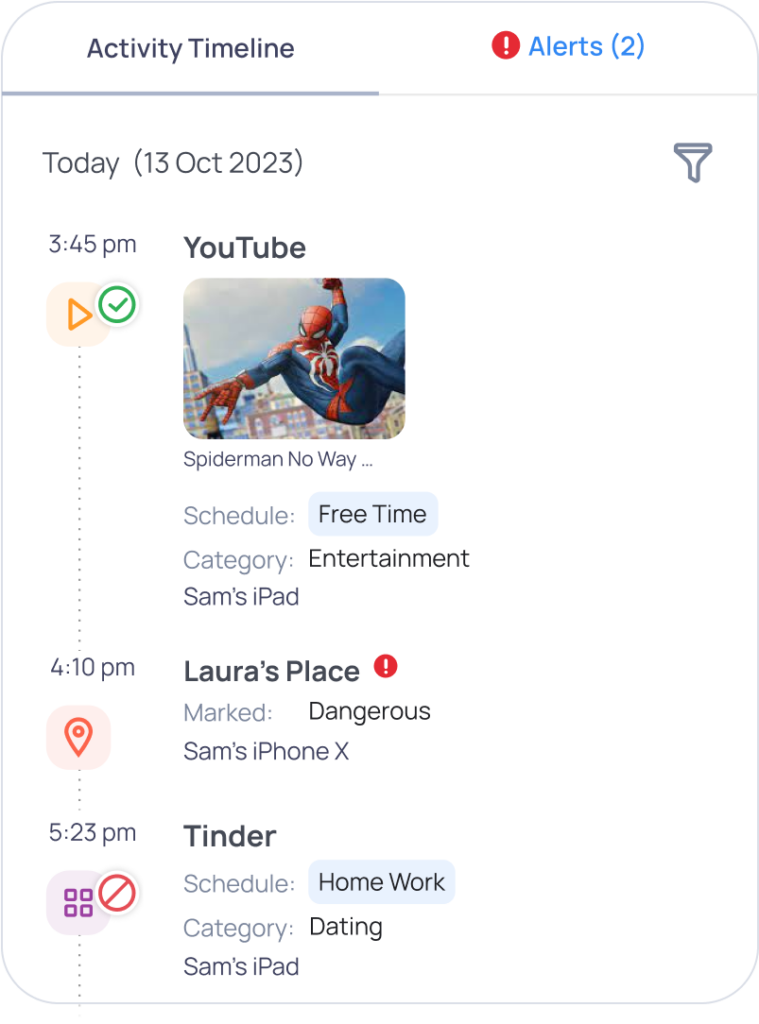

So what can parents do? Enter Mobicip, a parental control and digital safety platform trusted by families worldwide. Mobicip helps parents:

- Monitor and limit screen time.

- Filter harmful websites.

- Block harmful apps.

- Monitor their child’s browsing history.

At its core, Mobicip’s mission is simple yet powerful: to empower families to navigate the digital world with confidence and peace of mind. It’s not about replacing your parenting instincts with technology; it’s about giving you the right tools to support them.

Conclusion

At the end of the day, TikTok’s “For You” page isn’t going anywhere—but your child’s well-being should always come first. The same feed that serves up laughs and dance trends can also push harmful ideas faster than most kids are equipped to handle. That’s why the real solution lies in balance: staying involved, talking openly about what they see online, and using tools like Mobicip to add a layer of protection. When parents combine guidance with smart digital boundaries, kids don’t just survive the algorithm—they learn to thrive beyond it.